As you start learning Python programming, you’ll find many ways to work with data. One important area is machine learning, which lets you make predictions by fitting mathematical models to data. It has many applications, so it’s a good skill to have. In this guide, we’ll cover the basics of machine learning and how to start using it with Python. Python has many libraries that make it easy to get started; we’ll use Scikit-learn, Pandas, and Matplotlib in this guide.

The Data Set

Before we start with machine learning, let’s look at a data set and think about what we might want to predict. The data is from BoardGameGeek and has information on about 80,000 board games. Here’s an example of a single board game on the site. This data was kindly put into a CSV file by Sean Beck, and you can download it here. The data has several pieces of information about each board game. Here are some of the interesting ones:

Introduction to Pandas

The first step is to read the data and print some quick stats. To do this, we’ll use the Pandas tool. Pandas has data structures and tools that make working with data in Python easier. The most common data structure is called a dataframe. A dataframe is like a matrix, so we’ll talk about what a matrix is before we talk about dataframes. Our data file looks like this (we took out some columns to make it easier to look at):

id,type,name,yearpublished,minplayers,maxplayers,playingtime

12333,boardgame,Twilight Struggle,2005,2,2,180

120677,boardgame,Terra Mystica,2012,2,5,150

This is in a CSV format, or comma-separated values, which you can read more about here. Each row of the data is a different board game, and commas separate different pieces of information about each board game. The first row is the header row and tells us each piece of information. The whole set of one piece of information, going down, is a column. We can think of a CSV file as a matrix:

We took out some of the columns here to make it easier to look at, but you can still get a sense of how the data looks. A matrix is a two-dimensional data structure, with rows and columns. We can get to elements in a matrix by their position. The first row starts with id, the second row starts with 12333, and the third row starts with 120677. The first column is id, the second is type, and so on. In Python, we can use matrices with the NumPy tool. But matrices have some downsides. You can’t easily get to columns and rows by name, and each column has to be the same type of data. This means that we can’t store our board game data in a matrix – the name column has words, and the yearpublished column has numbers, so we can’t store them both in the same matrix. A dataframe, on the other hand, can have different types of data in each column. It also has many built-in features for looking at data, like looking up columns by name. Pandas gives us these features and makes working with data much easier.
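To make the difference concrete, here is a minimal sketch that builds both structures from the two sample rows shown earlier, trimmed to three columns. The variable names are only for illustration.

```python
import numpy as np
import pandas as pd

# Two rows from the sample above, trimmed to three columns.
rows = [
    [12333, "Twilight Struggle", 2005],
    [120677, "Terra Mystica", 2012],
]

# A NumPy array holds a single type, so the numbers are converted to strings.
matrix = np.array(rows)
print(matrix.dtype)

# A dataframe keeps a separate type per column and supports lookup by name.
frame = pd.DataFrame(rows, columns=["id", "name", "yearpublished"])
print(frame.dtypes)
print(frame["yearpublished"].max())
```

Because the array stores everything as text, numeric operations such as taking the latest year only work on the dataframe version.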

Importing Our Data

First, we’ll bring in our data from a CSV file and put it into a Pandas dataframe. We’ll use the read_csv function for this.

# Bring in the pandas library.

import pandas

# Load the data.

games = pandas.read_csv("board_games.csv")

# Show the column names in games.

print(games.columns)

The above code loads the data and displays all the column names. Any columns not listed above should be straightforward to understand.

print(games.shape)

We can also check the shape of the data, which tells us it has 81312 rows, or games, and 20 columns, or pieces of information for each game.
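If you don’t have the full file at hand, the same calls can be tried on the three sample lines shown earlier. This sketch reads them from an in-memory string instead of board_games.csv; the use of io.StringIO is just a convenience for the example.

```python
import io

import pandas as pd

# The header and two rows from the sample shown earlier.
csv_text = """id,type,name,yearpublished,minplayers,maxplayers,playingtime
12333,boardgame,Twilight Struggle,2005,2,2,180
120677,boardgame,Terra Mystica,2012,2,5,150
"""

# read_csv accepts any file-like object, not only a path on disk.
sample = pd.read_csv(io.StringIO(csv_text))

print(sample.columns.tolist())
print(sample.shape)  # (rows, columns)
```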

Examining Our Target Variables

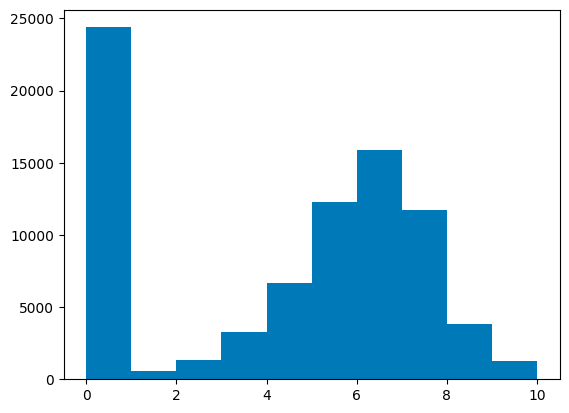

It could be fun to try to predict the average score a person would give to a brand new, unreleased board game. This is stored in the average_rating column, which is the average of all the user ratings for a board game. Predicting this column could be handy for board game makers who are thinking about what kind of game to make next. We can get a column in a dataframe with Pandas using games["average_rating"]. This will pull out a single column from the dataframe. Let’s make a histogram of this column so we can see how the ratings are spread out. We’ll use Matplotlib to make the plot. Matplotlib is the main plotting library you’ll come across as you learn Python programming, and several other plotting libraries, such as seaborn, are built on top of it. We bring in Matplotlib’s plotting functions with import matplotlib.pyplot as plt. We can then make and show plots.

# Bring in matplotlib

import matplotlib.pyplot as plt

# Make a histogram of all the ratings in the average_rating column.

plt.hist(games["average_rating"])

# Show the picture.

plt.show()

What we see here is that there are quite a few games with a 0 rating. There’s a fairly normal spread of ratings, with some right skew, and a mean rating around 6 (if you remove the zeros).

Looking at the 0 Ratings

Are there really so many bad games that were given a 0 rating? Or is something else happening? We’ll need to look at the data a bit more to check on this. With Pandas, we can select parts of the data using Boolean series (vectors, or one column/row of data, are known as series in Pandas). Here’s an example:

games[games["average_rating"] == 0]

The code above will make a new dataframe, with only the rows in games where the value of the average_rating column equals 0. We can then index the resulting dataframe to get the values we want. There are two ways to index in Pandas: by the name of the row or column, or by position. Indexing by name looks like games["average_rating"], which returns the whole average_rating column of games. Indexing by position looks like games.iloc[0], which returns the whole first row of the dataframe. We can also pass in a row and a column position at once: games.iloc[0,0] returns the value in the first column of the first row of games. Read more about Pandas indexing here.
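The indexing styles described above can be sketched on a tiny dataframe. The three games and their values are made up for illustration and are not taken from the BoardGameGeek data.

```python
import pandas as pd

# Made-up values for illustration only.
toy = pd.DataFrame({
    "name": ["Game A", "Game B", "Game C"],
    "average_rating": [0.0, 7.2, 6.5],
    "users_rated": [0, 150, 40],
})

# A comparison on a column produces a Boolean series, one value per row.
mask = toy["average_rating"] == 0

# Passing the Boolean series back in keeps only the rows where it is True.
unrated = toy[mask]

# Position-based indexing: first row, then first row and first column.
first_row = toy.iloc[0]
first_cell = toy.iloc[0, 0]

# Label-based indexing with a row label and a column name.
by_label = toy.loc[1, "average_rating"]

print(unrated)
print(first_cell, by_label)
```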

# Print the first row of all the games with zero scores.

# The .iloc indexer on dataframes allows us to index by position.

print(games[games["average_rating"] == 0].iloc[0])

# Print the first row of all the games with scores greater than 0.

print(games[games["average_rating"] > 0].iloc[0])

This shows us that the main difference between a game with a 0 rating and a game with a rating above 0 is that the 0 rated game has no reviews. The users_rated column is 0. By filtering out any board games with 0 reviews, we can remove much of the noise.

Filtering Out Games Without Reviews

# Remove any rows without user reviews.

games = games[games["users_rated"] > 0]

# Remove any rows with missing values.

games = games.dropna(axis=0)

We just got rid of all the rows without user reviews. At the same time, we also removed any rows with missing values. Many machine learning algorithms can’t handle missing values, so we need a way to deal with them. One common method is to filter them out, but this means we might lose valuable data. There are other techniques for handling missing data, which you can find here.
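As one example of an alternative, the sketch below compares dropping rows with filling each gap with the column median. Median imputation is an assumption chosen for illustration, not the approach used in this guide, and the values are made up.

```python
import numpy as np
import pandas as pd

# Made-up values with two gaps.
toy = pd.DataFrame({
    "minage": [8.0, np.nan, 12.0, 10.0],
    "playingtime": [30.0, 60.0, np.nan, 90.0],
})

# Option 1: drop every row that has at least one missing value.
dropped = toy.dropna(axis=0)

# Option 2: keep every row and fill each gap with that column's median.
filled = toy.fillna(toy.median())

print(len(toy), len(dropped), len(filled))
print(filled)
```

Dropping keeps only two of the four rows here, while filling keeps all four at the cost of inventing plausible values.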

Grouping Games

We’ve noticed that there might be distinct groups of games. One group (which we just removed) was the set of games without reviews. Another group could be a set of highly rated games. One way to learn more about these groups of games is a technique called clustering. Clustering helps you find patterns in your data by grouping similar rows (in this case, games) together. We’ll use a type of clustering called k-means clustering. Scikit-learn has a great implementation of k-means clustering that we can use. Scikit-learn is the main machine learning library in Python, and contains implementations of most common algorithms.

# Import the kmeans clustering model.

from sklearn.cluster import KMeans

# Initialize the model with 2 parameters -- number of clusters and random state.

kmeans_model = KMeans(n_clusters=5, random_state=1)

# Get only the numeric columns from games.

good_columns = games.select_dtypes(include="number")

# Fit the model using the good columns.

kmeans_model.fit(good_columns)

# Get the cluster assignments.

labels = kmeans_model.labels_

To use the clustering algorithm in Scikit-learn, we first initialize it with two parameters — n_clusters defines how many clusters of games we want, and random_state is a random seed we set to reproduce our results later. We then only get the numeric columns from our dataframe. Most machine learning algorithms can’t directly operate on text data, and can only take numbers as input. Getting only the numeric columns removes ‘type’ and ‘name’, which aren’t usable by the clustering algorithm. Finally, we fit our k-means model to our data, and get the cluster assignment labels for each row.
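To see what fitting and reading labels looks like in isolation, here is a minimal sketch on six made-up points that form two obvious groups. Setting n_init explicitly is an assumption made to keep behavior the same across Scikit-learn versions.

```python
import numpy as np
from sklearn.cluster import KMeans

# Six made-up points: three near (1, 1) and three near (8, 8).
points = np.array([
    [1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
    [8.0, 8.0], [8.2, 7.9], [7.8, 8.1],
])

# Ask for two clusters; random_state makes the result repeatable.
toy_model = KMeans(n_clusters=2, n_init=10, random_state=1)
toy_model.fit(points)

# One label per input row, in the same order as the rows.
toy_labels = toy_model.labels_
print(toy_labels)

# The center of each cluster, in the same units as the input columns.
print(toy_model.cluster_centers_)
```

The label numbers themselves are arbitrary; only which rows share a label is meaningful.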

Plotting Clusters

Now that we have cluster labels, let’s plot the clusters. One challenge is that our data has many columns — it’s hard to visualize things in more than 3 dimensions. So we’ll have to reduce the dimensionality of our data, without losing too much information. One way to do this is a technique called principal component analysis, or PCA. PCA takes multiple columns, and turns them into fewer columns while trying to preserve the unique information in each column.

# Import the PCA model.

from sklearn.decomposition import PCA

# Create a PCA model.

pca_2 = PCA(2)

# Fit the PCA model on the numeric columns from earlier.

plot_columns = pca_2.fit_transform(good_columns)

# Make a scatter plot of each game, shaded according to cluster assignment.

plt.scatter(x=plot_columns[:,0], y=plot_columns[:,1], c=labels)

# Show the plot.

plt.show()

We first initialize a PCA model from Scikit-learn. PCA isn’t a machine learning technique, but Scikit-learn also contains other models that are useful for performing machine learning. We then turn our data into 2 columns, and plot the columns. When we plot the columns, we shade them according to their cluster assignment. The plot shows the 5 clusters that k-means found and how well separated they are. We could dive more into which games are in each cluster to learn more about what factors cause games to be clustered.
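One way to check how much information survives the reduction is the explained_variance_ratio_ attribute of a fitted PCA model. The sketch below uses randomly generated data, not the board game data, with one column built to be nearly redundant.

```python
import numpy as np
from sklearn.decomposition import PCA

# Made-up data: four columns, where the third is almost a scaled copy
# of the first and the fourth is low-variance noise.
rng = np.random.default_rng(1)
base = rng.normal(size=(50, 2))
data = np.column_stack([
    base[:, 0],
    base[:, 1],
    base[:, 0] * 2 + rng.normal(scale=0.05, size=50),
    rng.normal(scale=0.1, size=50),
])

# Reduce four columns to two.
pca = PCA(n_components=2)
reduced = pca.fit_transform(data)

# Fraction of the total variance kept by the two new columns.
retained = pca.explained_variance_ratio_.sum()
print(reduced.shape)
print(retained)
```

A value close to 1 means the two new columns describe the data almost as well as the original four.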

Deciding What to Predict

Before we jump into machine learning, we need to decide two things: how we’re going to measure error and what we’re going to predict. We thought earlier that average_rating might be good to predict, and our exploration reinforces this idea. There are a variety of ways to measure error. Generally, when we’re doing regression and predicting continuous variables, we’ll need a different error metric than when we’re performing classification and predicting discrete values. For this, we’ll use mean squared error: it’s easy to calculate and simple to understand. It is the average of the squared differences between our predictions and the actual values, so larger misses are penalized more heavily.
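Mean squared error is short enough to compute by hand. The sketch below uses three made-up ratings and predictions; the square root at the end is an optional extra that puts the error back into rating points.

```python
import numpy as np

# Made-up actual ratings and predictions for three games.
actual = np.array([6.0, 7.0, 8.0])
predicted = np.array([5.0, 7.5, 8.5])

# Square each difference so misses in either direction count as positive.
squared_errors = (actual - predicted) ** 2

# Mean squared error is the average of those squared differences.
mse = squared_errors.mean()

# The square root is in the same units as the ratings themselves.
rmse = np.sqrt(mse)

print(mse, rmse)
```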

Finding Correlations

Now that we want to predict average_rating, let’s see what columns might be interesting for our prediction. One way is to find the correlation between average_rating and each of the other columns. This will show us which other columns might predict average_rating the best. We can use the corr method on Pandas dataframes to easily find correlations.

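# numeric_only=True skips text columns such as name (needed in pandas 2.0 and later).

games.corr(numeric_only=True)["average_rating"]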

id 0.304201

yearpublished 0.108461

minplayers -0.032701

maxplayers -0.008335

playingtime 0.048994

minplaytime 0.043985

maxplaytime 0.048994

minage 0.210049

users_rated 0.112564

average_rating 1.000000

bayes_average_rating 0.231563

total_owners 0.137478

total_traders 0.119452

total_wanters 0.196566

total_wishers 0.171375

total_comments 0.123714

total_weights 0.109691

average_weight 0.351081

Name: average_rating, dtype: float64

We see that the average_weight and id columns correlate best with the rating. ids are presumably assigned when the game is added to the database, so this likely indicates that games created later score higher in the ratings. Maybe reviewers were not as nice in the early days, or older games were of lower quality. average_weight indicates the “depth” or complexity of a game, so it may be that more complex games are reviewed better.
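When ranking columns by correlation, the strength of a relationship is its absolute value, since a strongly negative correlation is as informative as a strongly positive one. Here is a minimal sketch with made-up numbers; the column names are borrowed from the data set only to keep the example readable.

```python
import pandas as pd

# Made-up values for four games.
toy = pd.DataFrame({
    "average_weight": [1.0, 2.0, 3.0, 4.0],
    "minplayers": [4, 2, 3, 1],
    "average_rating": [5.0, 6.0, 6.5, 8.0],
})

# Correlation of every column with the target, leaving out the target itself.
correlations = toy.corr()["average_rating"].drop("average_rating")

# Rank by absolute value so strong negative relationships are not overlooked.
ranked = correlations.abs().sort_values(ascending=False)

print(correlations)
print(ranked)
```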

Choosing the Right Predictor Columns

Before we start predicting, let’s select only the relevant columns for training our algorithm. We’ll want to exclude certain columns that aren’t numeric. We’ll also want to exclude columns that can only be computed if you already know the average rating. Including these columns will defeat the purpose of the model, which is to predict the rating without any prior knowledge. Using columns that can only be computed with knowledge of the target leaks the answer into the inputs, so the model looks good on the data we have but doesn’t generalize to future data, such as unreleased games with no ratings yet.

# Get all the columns from the dataframe.

columns = games.columns.tolist()

# Filter the columns to remove ones we don't want.

columns = [c for c in columns if c not in ["bayes_average_rating", "average_rating", "type", "name"]]

# Store the variable we'll be predicting on.

target = "average_rating"

Splitting the Data into Training and Test Sets

We want to measure the accuracy of our algorithm using our error metric. However, evaluating the algorithm on the same data it was trained on gives an overly optimistic error estimate, because a model that has overfit the training data will still score well on it. To get an honest estimate, we’ll train our algorithm on a set consisting of 80% of the data and test it on another set consisting of the remaining 20%.

# Import scikit-learn's split helper (shown for reference; the split below uses pandas sampling instead).

from sklearn.model_selection import train_test_split

# Generate the training set. Set random_state to be able to replicate results.

train = games.sample(frac=0.8, random_state=1)

# Select anything not in the training set and put it in the testing set.

test = games.loc[~games.index.isin(train.index)]

# Print the shapes of both sets.

print(train.shape)

print(test.shape)
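The same 80/20 split can also be made with Scikit-learn’s train_test_split. This is a sketch on a made-up ten-row dataframe; the exact rows chosen will differ from the pandas sampling approach above even with the same random_state.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# A made-up dataframe with ten rows.
toy = pd.DataFrame({"x": range(10), "y": range(10, 20)})

# test_size=0.2 holds out 20% of the rows; random_state makes it repeatable.
toy_train, toy_test = train_test_split(toy, test_size=0.2, random_state=1)

print(toy_train.shape)
print(toy_test.shape)
```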

Applying Linear Regression

Linear regression is a commonly used machine learning algorithm. It predicts the target variable using linear combinations of the predictor variables. We can use the linear regression implementation in Scikit-learn.

# Import the linear regression model.

from sklearn.linear_model import LinearRegression

# Initialize the model class.

model = LinearRegression()

# Fit the model to the training data.

model.fit(train[columns], train[target])
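A fitted linear regression stores one weight per predictor column plus an intercept, and a prediction is the weighted sum of the inputs. The sketch below fits made-up data generated from a known formula so the learned weights can be checked by eye.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Made-up predictors and a target built as 2*x1 + 3*x2 + 1.
features = np.array([
    [1.0, 2.0],
    [2.0, 1.0],
    [3.0, 4.0],
    [4.0, 3.0],
    [5.0, 5.0],
])
targets = 2.0 * features[:, 0] + 3.0 * features[:, 1] + 1.0

toy_model = LinearRegression()
toy_model.fit(features, targets)

# coef_ holds one weight per column; intercept_ is the constant term.
print(toy_model.coef_)
print(toy_model.intercept_)
```

On real data the relationship is never exact, so the weights are only the best linear fit rather than a recovered formula.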

Predicting and Calculating Error

After training our model, we can use it to make predictions on unseen data. We’ll use the model to predict the average rating of board games in our test set.

# Import the scikit-learn function to compute error.

from sklearn.metrics import mean_squared_error

# Generate our predictions for the test set.

predictions = model.predict(test[columns])

# Compute error between our test predictions and the actual values.

mean_squared_error(test[target], predictions)

Trying a Different Model

One of the great things about Scikit-learn is that it allows us to try more powerful algorithms very easily. One such algorithm is called random forest. The random forest algorithm can find nonlinearities in data that a linear regression wouldn’t be able to pick up on.

# Import the random forest model.

from sklearn.ensemble import RandomForestRegressor

# Initialize the model with some parameters.

model = RandomForestRegressor(n_estimators=100, min_samples_leaf=10, random_state=1)

# Fit the model to the data.

model.fit(train[columns], train[target])

# Make predictions.

predictions = model.predict(test[columns])

# Compute the error.

mean_squared_error(test[target], predictions)
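To illustrate what finding nonlinearities means, the sketch below fits both models to made-up data where the target is the square of a single input. The data, the even/odd split, and the parameter values are assumptions chosen for the example.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# Made-up data: the target is the square of the single predictor.
x = np.linspace(-3, 3, 200).reshape(-1, 1)
y = x.ravel() ** 2

# Alternate rows between training and test sets.
x_train, y_train = x[::2], y[::2]
x_test, y_test = x[1::2], y[1::2]

# A straight line cannot follow a U-shaped curve.
linear = LinearRegression().fit(x_train, y_train)
linear_mse = mean_squared_error(y_test, linear.predict(x_test))

# A random forest approximates the curve with many small steps.
forest = RandomForestRegressor(n_estimators=50, random_state=1)
forest.fit(x_train, y_train)
forest_mse = mean_squared_error(y_test, forest.predict(x_test))

print(linear_mse, forest_mse)
```

The gap between the two errors on real data depends on how nonlinear the underlying relationships actually are.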

Further Exploration

We’ve managed to go from data in CSV format to making predictions. Here are some ideas for further exploration:

- Try a support vector machine.

- Try combining multiple models to create better predictions.

- Try predicting a different column, such as average_weight.

- Generate features from the text, such as the length of the game’s name, the number of words, etc.

The Jupyter Notebook for this tutorial is available for download here.